What are Tokens?

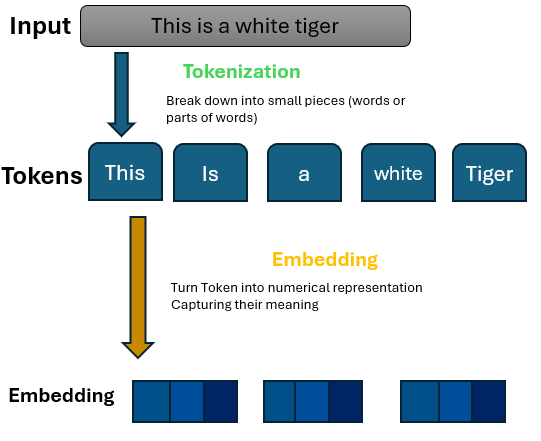

In the context of Large Language Models (LLMs), tokens are the fundamental units of text that the model processes. Think of tokens as the “atoms” of language models – they’re the smallest building blocks that LLMs understand and generate.

Tokens are not always equivalent to words. Depending on the tokenization approach used, a token could represent:

- A single character

- A subword (part of a word)

- A complete word

- Punctuation marks

- Special symbols

- Whitespace characters

For example, the sentence “I love machine learning!” might be tokenized as [“I”, “love”, “machine”, “learning”, “!”] or as [“I”, “ love”, “ machine”, “ learn”, “ing”, “!”], depending on the tokenization method.
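To make the two ends of that spectrum concrete, here is a minimal sketch of a word-level and a character-level tokenizer. Both functions (`word_level_tokenize`, `char_level_tokenize`) are illustrative helpers written for this article, not part of any LLM library, and real tokenizers usually sit somewhere between the two.

```python
import re

def word_level_tokenize(text: str) -> list[str]:
    # Each run of word characters is one token; each punctuation mark is its own token.
    return re.findall(r"\w+|[^\w\s]", text)

def char_level_tokenize(text: str) -> list[str]:
    # Every character, including spaces, becomes a token.
    return list(text)

sentence = "I love machine learning!"
print(word_level_tokenize(sentence))       # 5 tokens
print(len(char_level_tokenize(sentence)))  # 24 tokens
```

The same sentence costs 5 tokens at word level and 24 at character level, which is the trade-off subword methods try to balance.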

Why Tokenization Matters

Tokenization is crucial for several reasons:

- Vocabulary Management: LLMs have finite vocabularies (commonly somewhere between about 30K and a few hundred thousand tokens). Effective tokenization allows models to represent a vast range of words and expressions with a limited vocabulary.

- Handling Unknown Words: Good tokenization strategies can break down unfamiliar words into familiar subword units, enabling models to handle words they’ve never seen before.

- Efficiency: The length of text sequences directly impacts computational requirements. Efficient tokenization reduces the number of tokens needed to represent text.

- Model Performance: The quality of tokenization significantly affects how well LLMs understand and generate text, especially for non-English languages or specialized domains.

Common Tokenization Methods

1. Byte Pair Encoding (BPE)

BPE is one of the most widely used tokenization methods in modern LLMs, employed by models like GPT-2, GPT-3, and GPT-4.

How it works:

- Start with a basic vocabulary containing individual characters

- Count the frequency of adjacent symbol pairs (initially character pairs) in the training corpus

- Iteratively merge the most frequent pair into a new token

- Continue this process until reaching a desired vocabulary size

BPE effectively balances between character-level and word-level tokenization, creating a vocabulary of subword units that efficiently represents the language.
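The merge loop described above can be sketched in a few lines. This is a toy trainer under simplifying assumptions: the corpus is a plain list of words, there is no pre-tokenization or end-of-word marker, and ties are broken by whichever pair was seen first. The function name `train_bpe` and the sample corpus are made up for illustration.

```python
from collections import Counter

def train_bpe(words: list[str], num_merges: int) -> list[tuple[str, str]]:
    # Represent each distinct word as a tuple of symbols with its frequency.
    corpus = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by how often the word occurs.
        pair_counts = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break  # every word is already a single symbol
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        # Rewrite every word, replacing the best pair with one merged symbol.
        new_corpus = Counter()
        for symbols, freq in corpus.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_corpus[tuple(merged)] += freq
        corpus = new_corpus
    return merges

toy_corpus = ["low"] * 5 + ["lower"] * 2 + ["newest"] * 6 + ["widest"] * 3
print(train_bpe(toy_corpus, 3))
```

On this toy corpus the first three learned merges are `('e', 's')`, `('es', 't')`, and `('l', 'o')`: the frequent suffix “est” becomes a single token before any whole word does.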

A key variant is byte-level BPE, which works directly with UTF-8 bytes rather than Unicode characters. This ensures that any possible character can be represented (even those not seen during training), avoiding the “unknown token” problem.
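The reason byte-level vocabularies never need an “unknown” token is that every string reduces to values between 0 and 255. The sketch below shows only that base layer, before any merges are applied; the helper names `to_byte_tokens` and `from_byte_tokens` are illustrative.

```python
def to_byte_tokens(text: str) -> list[int]:
    # Any string maps to a sequence of integers in the range 0..255.
    return list(text.encode("utf-8"))

def from_byte_tokens(tokens: list[int]) -> str:
    # Decoding the same bytes recovers the original string exactly.
    return bytes(tokens).decode("utf-8")

for sample in ["A", "é", "語", "🙂"]:
    ids = to_byte_tokens(sample)
    print(sample, len(ids), from_byte_tokens(ids) == sample)
```

A base vocabulary of 256 entries therefore covers all text, at the cost of rarer characters taking several tokens each (here 1, 2, 3, and 4 bytes respectively) unless merges combine them.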

2. WordPiece

WordPiece is the tokenization algorithm used by BERT, DistilBERT, and ELECTRA.

How it works:

- Similar to BPE, it starts with a character-level vocabulary

- Instead of merging based purely on frequency, it selects the pair whose merge most increases the likelihood of the training data

- It uses a special prefix (typically “##”) to mark subword tokens that don’t start a word

For example, “unhappy” might be tokenized as [“un”, “##happy”] in WordPiece.
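Once a WordPiece vocabulary has been trained, splitting a word is commonly done with a greedy longest-match-first scan. The sketch below shows that inference step only, not the likelihood-based training. The tiny vocabulary `toy_vocab` is invented for this example, and `wordpiece_tokenize` is a hypothetical helper, not a library function.

```python
def wordpiece_tokenize(word: str, vocab: set[str], unk: str = "[UNK]") -> list[str]:
    tokens, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # mark pieces that continue a word
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk]  # nothing matched: treat the whole word as unknown
        tokens.append(piece)
        start = end
    return tokens

toy_vocab = {"un", "happy", "##happy", "##happi", "##ness"}
print(wordpiece_tokenize("unhappy", toy_vocab))
print(wordpiece_tokenize("unhappiness", toy_vocab))
print(wordpiece_tokenize("xyz", toy_vocab))
```

With this vocabulary, “unhappy” becomes `['un', '##happy']`, “unhappiness” becomes `['un', '##happi', '##ness']`, and “xyz” falls back to `['[UNK]']` because none of its pieces are known.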

3. SentencePiece

SentencePiece is a language-independent tokenizer used by models like T5, XLNet, and ALBERT.

What to know:

- Treats input text as a raw stream of Unicode characters, including spaces

- Space is preserved as a special symbol (typically “▁”)

- Can implement either BPE or Unigram Language Model algorithms

- Eliminates the need for language-specific pre-tokenization

- Particularly effective for languages without explicit word boundaries (like Japanese or Chinese)
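The space-as-symbol idea can be illustrated without the `sentencepiece` library itself. The sketch below is a toy model of the convention only: the helper names are made up, prefixing the text with a marker is a choice made here so that every word starts the same way, and the piece boundaries in the usage example are hypothetical rather than the output of a trained model.

```python
META = "\u2581"  # "▁", the visible stand-in for a space

def encode_spaces(text: str) -> str:
    # Prefix a marker and turn every space into the marker, so whitespace
    # becomes an ordinary symbol that can be part of a token.
    return META + text.replace(" ", META)

def decode_pieces(pieces: list[str]) -> str:
    # Concatenate pieces, restore spaces, and drop the space added by the prefix.
    return "".join(pieces).replace(META, " ")[1:]

print(encode_spaces("Hello world"))
print(decode_pieces([META + "Hello", META + "wor", "ld"]))
print(decode_pieces([META + "東京", "都"]))
```

Because spaces survive inside the pieces, decoding is just concatenation. No language-specific rule is needed to decide where spaces go back, which is why the same procedure works for text with no spaces at all.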

4. Unigram

Unigram is often used together with SentencePiece and works in the opposite direction from BPE and WordPiece: it prunes a large vocabulary instead of growing a small one.

How it works:

- Start with a large vocabulary of possible subword candidates

- Iteratively remove the tokens whose removal least reduces the likelihood of the training data

- Use a probabilistic model to find the most likely segmentation of each word
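The “most likely segmentation” step can be sketched with dynamic programming (a Viterbi-style search) over token probabilities. The probabilities in `toy_probs` are invented for illustration, not learned from data, and `unigram_segment` is a hypothetical helper. The pruning step that shrinks the vocabulary during training is not shown.

```python
import math

def unigram_segment(word: str, probs: dict[str, float]) -> list[str]:
    n = len(word)
    # best[i] holds (log-probability, start index of the last piece) for word[:i].
    best = [(-math.inf, -1)] * (n + 1)
    best[0] = (0.0, -1)
    for end in range(1, n + 1):
        for start in range(end):
            piece = word[start:end]
            if piece in probs and best[start][0] > -math.inf:
                score = best[start][0] + math.log(probs[piece])
                if score > best[end][0]:
                    best[end] = (score, start)
    if best[n][0] == -math.inf:
        return []  # the word cannot be built from this vocabulary
    # Walk the back-pointers from the end of the word to recover the pieces.
    pieces, end = [], n
    while end > 0:
        start = best[end][1]
        pieces.append(word[start:end])
        end = start
    return pieces[::-1]

toy_probs = {"un": 0.1, "happy": 0.05, "hap": 0.02, "py": 0.02,
             "u": 0.01, "n": 0.01, "h": 0.01, "a": 0.01, "p": 0.01, "y": 0.01}
print(unigram_segment("unhappy", toy_probs))
```

Here `['un', 'happy']` wins because its probability (0.1 × 0.05) is higher than that of any alternative such as `['un', 'hap', 'py']` (0.1 × 0.02 × 0.02).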

Tokens and Context Windows

LLMs have a limited “context window” – the maximum number of tokens they can process at once. This limit directly affects:

- The length of input text the model can consider

- How much text the model can generate in one go

- The model’s ability to maintain coherence across long passages

Context windows have grown from 2,048 tokens (GPT-3) to 8,192 tokens (the base GPT-4 model, with a 32,768-token variant), and some newer models accept 100,000 tokens or more.
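In practice the limit becomes a simple budgeting exercise. The sketch below assumes that the prompt and the generated output share a single window, which is common but not universal, and that dropping the oldest tokens is an acceptable truncation strategy. Both function names are illustrative.

```python
def fits_context(prompt_tokens: int, max_output_tokens: int, context_window: int) -> bool:
    # Assumption: input and output tokens count against the same window.
    return prompt_tokens + max_output_tokens <= context_window

def truncate_to_window(token_ids: list[int], context_window: int,
                       reserved_for_output: int) -> list[int]:
    # Keep only the most recent tokens that fit once output space is reserved.
    budget = context_window - reserved_for_output
    if budget <= 0:
        return []
    return token_ids[-budget:]

print(fits_context(2000, 48, 2048))               # exactly fills a 2,048-token window
print(fits_context(2000, 100, 2048))              # 52 tokens over the limit
print(truncate_to_window(list(range(10)), 8, 3))  # keeps the last 5 token ids
```

Keeping the tail rather than the head suits chat-style prompts, where the latest turns matter most. A document-summarization prompt might prefer a different strategy.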

Practical Implications of Tokenization

Token Counting

Understanding token counts is important for:

- Estimating API costs (many LLM APIs charge per token)

- Staying within context window limits

- Optimizing prompt design

As a rough estimate for English text (this varies by tokenizer and content):

- 1 token ≈ 4 characters

- 1 token ≈ 0.75 words

- 100 tokens ≈ 75 words or ~1 paragraph
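These rules of thumb translate directly into a quick estimator. The sketch below is only a back-of-the-envelope tool: the function names are made up, the price passed to `estimate_cost` is a placeholder rather than any provider's real rate, and an exact count requires running the model's actual tokenizer.

```python
def estimate_tokens(text: str) -> dict[str, float]:
    # Two independent estimates from the rules of thumb for English text.
    by_chars = len(text) / 4             # 1 token is roughly 4 characters
    by_words = len(text.split()) / 0.75  # 1 token is roughly 0.75 words
    return {"by_chars": by_chars, "by_words": by_words}

def estimate_cost(num_tokens: float, price_per_1k_tokens: float) -> float:
    # price_per_1k_tokens is a hypothetical input; check your provider's pricing.
    return num_tokens / 1000 * price_per_1k_tokens

estimate = estimate_tokens("I love machine learning!")
print(estimate)
print(estimate_cost(1500, 0.002))
```

For “I love machine learning!” the two estimates are 6.0 and about 5.3 tokens, which brackets the 5 to 6 tokens of the example tokenizations shown earlier in this article.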

Tokenization Quirks

Tokenization can lead to some unexpected behaviors:

- Non-English Languages: Many LLMs tokenize non-English text inefficiently, using more tokens per word than English.

- Special Characters: Unusual characters, emojis, or specific formatting might consume more tokens than expected.

- Numbers and Code: Some tokenizers handle numbers and programming code in counter-intuitive ways, breaking them into multiple tokens.

- Whitespace Sensitivity: Adding or removing spaces can change how text is tokenized and potentially alter model behavior.
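Two of these quirks, whitespace sensitivity and number splitting, can be reproduced with a toy pre-tokenizer. The regular expression below is invented for illustration: it attaches a leading space to the following word and splits digit runs into groups of up to three. Some real tokenizers use rules in this spirit, but this pattern is not taken from any of them.

```python
import re

# Toy pattern (an assumption, not a real tokenizer's rule set):
# optional leading space + letters, optional leading space + up to 3 digits,
# a single punctuation/symbol character, or a single whitespace character.
PATTERN = re.compile(r" ?[A-Za-z]+| ?\d{1,3}|[^\sA-Za-z\d]|\s")

def toy_pretokenize(text: str) -> list[str]:
    return PATTERN.findall(text)

print(toy_pretokenize("hello world"))     # one space between the words
print(toy_pretokenize("hello  world"))    # two spaces between the words
print(toy_pretokenize("price: 1234567"))
```

With one space the result is `['hello', ' world']`; with two it is `['hello', ' ', ' world']`, so a single extra space adds a token. The number is split into `' 123'`, `'456'`, and `'7'`, which shows how a value the reader sees as one unit can reach the model as several.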

Conclusion

Tokenization is a fundamental aspect of how LLMs function. The choice of tokenization strategy shapes an LLM’s vocabulary size, the number of tokens it needs per text, and how well it performs across different languages and domains. As LLMs continue to evolve, improvements in tokenization remain one of the ways to make them more capable and efficient.