This is the second part of the Caching Mastery Roadmap, explaining common caching policies, algorithms, and deployments simply.

This article explores different caching policies like Least Recently Used (LRU), Least Frequently Used (LFU), and Random Replacement (RR).

It also goes over different caching deployments, including in-process, inter-process, and remote caching, with real-world examples.

Caching Algorithms

Least Recently Used (LRU)

LRU is a popular caching algorithm that discards the least recently used items first. This algorithm assumes that data accessed recently will likely be accessed again soon. LRU is effective in situations where the pattern of data access is relatively stable.

An example demonstrating how LRU is implemented:

class Node:

def __init__(self, key, value):

self.key = key

self.value = value

self.prev = None

self.next = None

class LRUCache:

def __init__(self, capacity: int):

self.capacity = capacity

self.cache = {} # Map keys to nodes

# Dummy nodes to simplify edge case handling

self.head = Node(0, 0)

self.tail = Node(0, 0)

self.head.next = self.tail

self.tail.prev = self.head

def get(self, key: int) -> int:

node = self.cache.get(key)

if not node:

return -1

self._move_to_front(node)

return node.value

def put(self, key: int, value: int) -> None:

if key in self.cache:

self._remove_node(self.cache[key])

node = Node(key, value)

self.cache[key] = node

self._add_node(node)

if len(self.cache) > self.capacity:

tail = self.head.next

self._remove_node(tail)

del self.cache[tail.key]

def _add_node(self, node):

# Add to the front (just before tail)

node.next = self.tail

node.prev = self.tail.prev

self.tail.prev.next = node

self.tail.prev = node

def _remove_node(self, node):

# Remove from the list

prev_node = node.prev

next_node = node.next

prev_node.next = next_node

next_node.prev = prev_node

def _move_to_front(self, node):

# Move a node to the front (just before tail)

self._remove_node(node)

self._add_node(node)

# Example usage

cache = LRUCache(2)

cache.put(1, 1)

cache.put(2, 2)

print(cache.get(1)) # returns 1

cache.put(3, 3) # evicts key 2

print(cache.get(2)) # returns -1 (not found)

cache.put(4, 4) # evicts key 1

print(cache.get(1)) # returns -1 (not found)

print(cache.get(3)) # returns 3

print(cache.get(4)) # returns 4An example demonstrating how LRU works:

from functools import lru_cache

import time

@lru_cache(maxsize=3)

def expensive_operation(x):

# Simulating a time-consuming computation

print(f"Computing for {x}...")

time.sleep(2)

return x * x

# Using the cached function

print(expensive_operation(2)) # Cache miss, computes and stores the result

print(expensive_operation(3)) # Cache miss, computes and stores the result

print(expensive_operation(4)) # Cache miss, computes and stores the result

# This call will result in a cache hit, and will return the stored result immediately

print(expensive_operation(2))

# Adding another call, this will cause the cache for '2' to be evicted as the cache can only hold 3 items

print(expensive_operation(5))

# This call will be a cache miss, since the result for '2' was evicted earlier

print(expensive_operation(2))

Least Frequently Used (LFU)

LFU algorithm removes the least frequently accessed data. Unlike LRU, LFU considers access frequency rather than the recency of access. This approach is beneficial when the importance of data is determined by how often it is accessed, rather than when it was last accessed.

An example demonstrating how LFU is implemented:

class LFUCache:

def __init__(self, capacity: int):

self.capacity = capacity

self.min_freq = 0

self.key_to_val_freq = {} # Maps key to (value, frequency)

self.freq_to_keys = defaultdict(OrderedDict) # Maps frequency to keys in order of usage

self.size = 0

def get(self, key: int) -> int:

if key not in self.key_to_val_freq:

return -1

# Increase the frequency of the key

val, freq = self.key_to_val_freq[key]

del self.freq_to_keys[freq][key]

if not self.freq_to_keys[freq]:

del self.freq_to_keys[freq]

if self.min_freq == freq:

self.min_freq += 1

self.freq_to_keys[freq + 1][key] = None

self.key_to_val_freq[key] = (val, freq + 1)

return val

def put(self, key: int, value: int) -> None:

if self.capacity == 0:

return

if key in self.key_to_val_freq:

# Update the value and increase frequency

_, freq = self.key_to_val_freq[key]

self.key_to_val_freq[key] = (value, freq + 1)

del self.freq_to_keys[freq][key]

if not self.freq_to_keys[freq]:

del self.freq_to_keys[freq]

if self.min_freq == freq:

self.min_freq += 1

self.freq_to_keys[freq + 1][key] = None

else:

# Check if the cache is full

if self.size == self.capacity:

# Evict the least frequently used key

evict_key, _ = self.freq_to_keys[self.min_freq].popitem(last=False)

del self.key_to_val_freq[evict_key]

self.size -= 1

# Add the new key

self.key_to_val_freq[key] = (value, 1)

self.freq_to_keys[1][key] = None

self.min_freq = 1

self.size += 1

# Example usage

lfu_cache = LFUCache(2)

lfu_cache.put(1, 1)

lfu_cache.put(2, 2)

print(lfu_cache.get(1)) # returns 1

lfu_cache.put(3, 3) # evicts key 2

print(lfu_cache.get(2)) # returns -1 (not found)

print(lfu_cache.get(3)) # returns 3

lfu_cache.put(4, 4) # evicts key 1

print(lfu_cache.get(1)) # returns -1 (not found)

print(lfu_cache.get(3)) # returns 3

print(lfu_cache.get(4)) # returns 4Least Recently Updated (LRUp)

This variant of LRU focuses on the time since the data was last updated rather than when it was last accessed. It’s useful in scenarios where the freshness of data is more critical than its access frequency.

Random Replacement (RR)

Random Replacement (RR) is a caching algorithm used in the management of cache memory. Unlike other caching algorithms that use specific criteria for cache item eviction, Random Replacement selects a random item for eviction when the cache is full and needs space for new items.

import random

class RRCache:

def __init__(self, capacity: int):

self.capacity = capacity

self.cache = {}

self.keys = []

def get(self, key: int) -> int:

return self.cache.get(key, -1)

def put(self, key: int, value: int) -> None:

if key not in self.cache and len(self.keys) == self.capacity:

# Randomly select a key for eviction

evict_key = random.choice(self.keys)

self.cache.pop(evict_key)

self.keys.remove(evict_key)

self.cache[key] = value

if key not in self.keys:

self.keys.append(key)

# Example usage

rr_cache = RRCache(3)

rr_cache.put(1, 1)

rr_cache.put(2, 2)

rr_cache.put(3, 3)

print(rr_cache.get(1)) # returns 1

rr_cache.put(4, 4) # randomly evicts a key

print(rr_cache.get(2)) # might return -1 if key 2 was evicted

print(rr_cache.get(4)) # returns 4Eviction Policies

Caching systems often need to make room for new data by evicting existing data, and they use various eviction policies to decide which data to remove:

- Capacity-based Eviction: Data is removed when the cache reaches its maximum capacity.

- Examples: LRU, LFU, RR

- Time-based Eviction: Involves removing data after a certain period, regardless of its use. TTL (Time-To-Live) is a common time-based eviction policy.

- Fixed TTL: Each cache entry is given a fixed lifespan when it is added to the cache. After the TTL expires, the data is automatically evicted during the next cleanup cycle.

- Sliding TTL: The TTL is reset every time the cache entry is accessed, which keeps frequently accessed data longer in the cache, potentially reducing the load on the underlying data source.

- Priority-based Eviction: Some caching systems assign priorities to data items. When there’s a need for eviction, items with the lowest priority are removed first. Potential prioritization criteria:

- Data criticality: More important data, such as financial records or real-time analytics, can be assigned higher priorities.

- Cost of retrieval: Data that is expensive or slow to retrieve from the original data source may be given a higher priority to remain in the cache.

- User-defined rules: Priorities can also be set based on specific business rules or operational requirements, allowing more control over what data stays in the cache.

Different Types of Caching: In-Process, Inter-Process, and Remote Cache

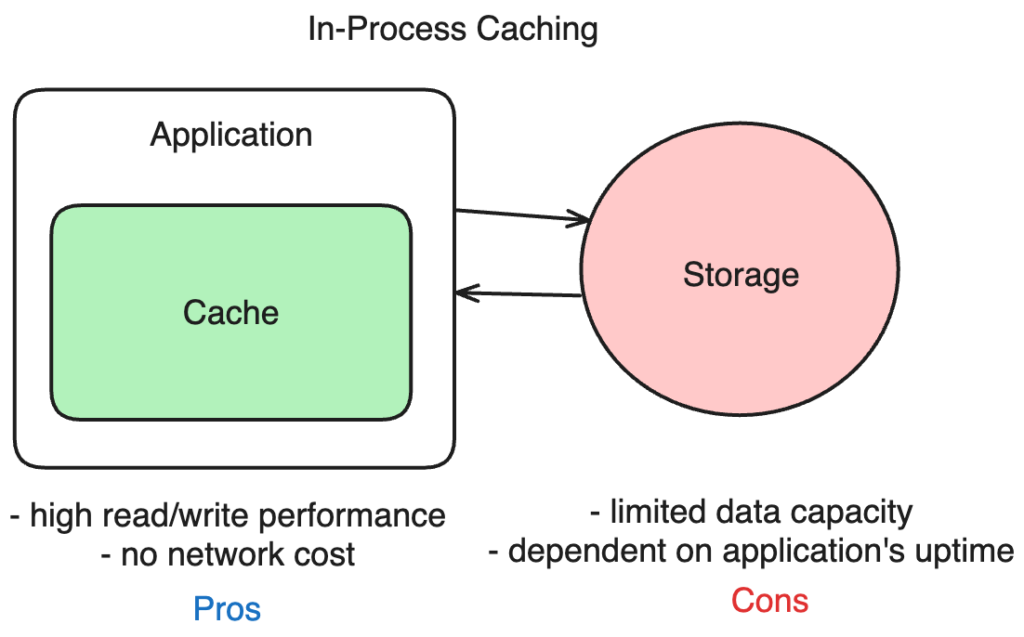

In-Process Cache

In-process caching refers to a cache that resides within the same process as the application.

It involves storing data directly in the application’s memory space, making it extremely fast since it eliminates the need for network calls or inter-process communication.

Advantages

- High Speed: As the cache is stored in the application’s memory, data retrieval is rapid.

- Ease of Implementation: Being part of the application, it’s often straightforward to implement and manage.

- Zero Network Overhead: Since it doesn’t require network communication, it eliminates related latency and bandwidth issues.

Disadvantages

- Limited Scalability: The cache size is limited by the application’s memory, restricting how much data can be stored.

- Data Volatility: If the application restarts or crashes, the cached data is lost.

Use Cases

An in-process cache is ideal for standalone applications or services where data access speed is crucial, and the data set is relatively small.

Here’s a code example:

# In-Process Cache Implementation Example

def expensive_operation(argument):

# Simulate a time-consuming operation

print(f"Computing result for {argument}")

return argument * 2

class InProcessCache:

def __init__(self):

self.cache = {}

def get(self, key):

return self.cache.get(key)

def set(self, key, value):

self.cache[key] = value

# Instantiate the cache

cache = InProcessCache()

def cached_expensive_operation(argument):

# Check if the result is already in the cache

if (cached_result := cache.get(argument)) is not None:

print(f"Cache hit for {argument}")

return cached_result

# If not in cache, compute and store the result

result = expensive_operation(argument)

cache.set(argument, result)

return result

# Example usage

print(cached_expensive_operation(10)) # Will compute and cache

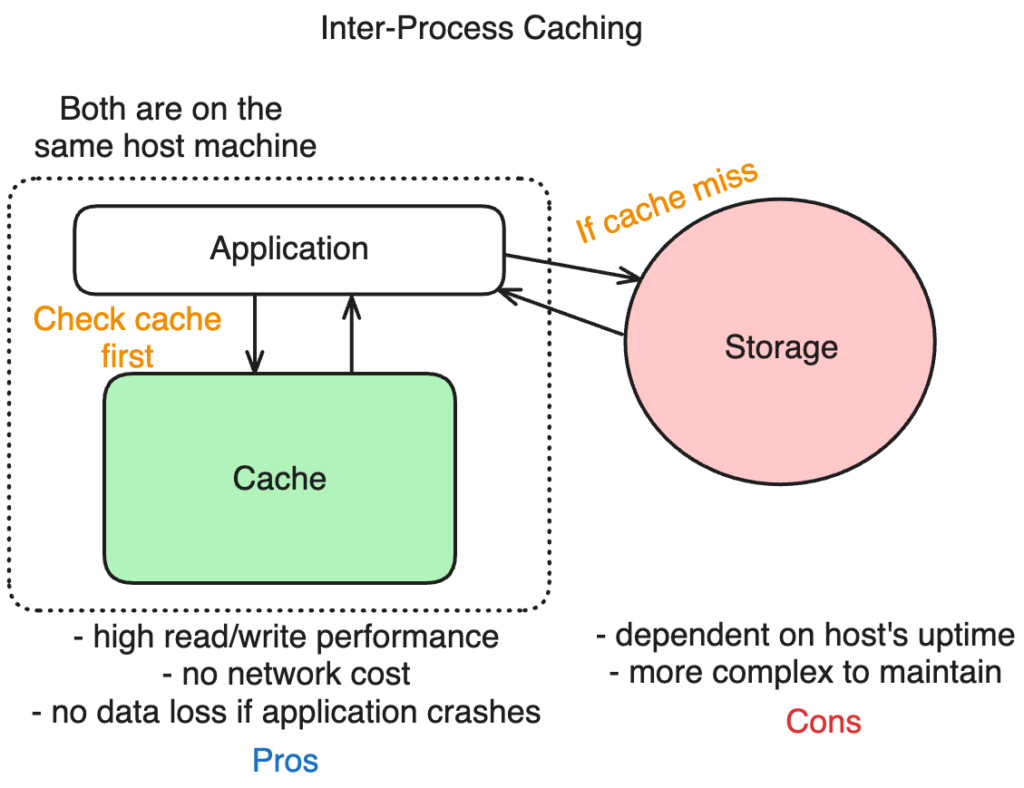

print(cached_expensive_operation(10)) # Will fetch from cacheInter-Process Cache

An inter-process cache operates in a separate process on the same machine as the application. They are deployed locally on the machine.

It’s a standalone system that communicates with the application through inter-process communication mechanisms.

Examples of inter-process caches include Memcached, RocksDB, and Redis in standalone mode.

Advantages

- Isolation: Separating the cache from the application process can improve stability and security.

- Persistence: Cached data can persist even if the application restarts.

- Fast Access: While slower than in-process cache, it still provides quick access with minimal network latency.

Disadvantages

- Complexity: Requires more complex setup and management compared to in-process caching.

- Potential for Data Loss: If the machine hosting the cache fails, the data might be lost unless replicated elsewhere.

Use Cases

Inter-process cache is suitable for applications that need a balance between speed and data persistence and can manage slightly more complexity in cache management.

Here’s a code example:

import redis # Assuming we're using redis and it's setup locally.

import time

def expensive_operation(argument):

# Simulate a time-consuming operation

print(f"Computing result for {argument}")

time.sleep(2) # Simulating delay

return argument * 2

# Connect to Redis server

redis_client = redis.Redis(host='localhost', port=6379, db=0)

def cached_expensive_operation(argument):

# Convert argument to a string to use as a key

key = f"expensive_operation:{argument}"

# Try to get the cached value

cached_value = redis_client.get(key)

if cached_value is not None:

print(f"Cache hit for {argument}")

return int(cached_value)

# If not cached, compute and store the result

result = expensive_operation(argument)

redis_client.set(key, result)

return result

# Example usage

print(cached_expensive_operation(10)) # Will compute and cache

print(cached_expensive_operation(10)) # Will fetch from cache

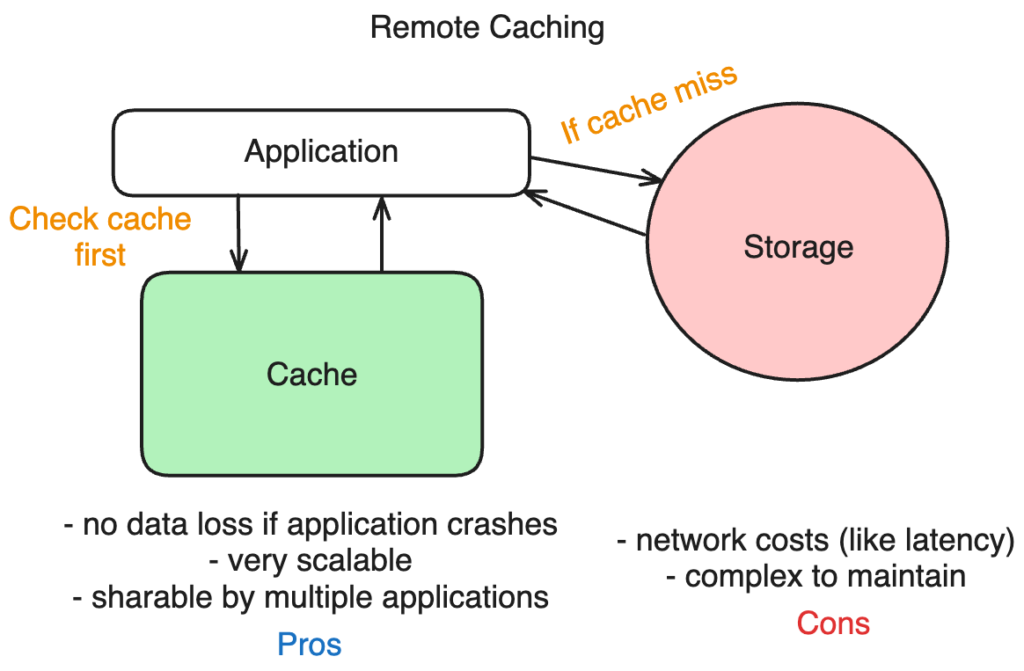

Remote Cache

Remote cache is hosted on a separate machine or cluster of machines, distinct from the application server. It’s designed for distributed environments and can be shared across multiple applications.

Examples of remote caches include Memcached and Redis deployed on remote servers.

A real-world example is Instagram, who scaled to 14 million users with only 3 engineers, and they used Memcached.

Another example is Facebook, who created the largest Memcached system in the world. This allowed them to handle billions of requests per second efficiently.

Advantages

- Scalability: Can handle larger datasets and more users, as it’s not limited by a single application’s resources.

- High Availability: Often designed for fault tolerance and can replicate data across multiple nodes.

- Shared Resource: Multiple applications can use the same cache, optimizing resource usage.

Disadvantages

- Network Latency: Data retrieval is slower due to network communication overhead.

- Complexity: Requires more sophisticated configuration and maintenance.

Use Cases

Remote cache is ideal for distributed systems, microservices architectures, and applications where cache resources need to be shared across different components or services.

Here is a code example:

import redis

import time

def expensive_operation(argument):

# Simulate a time-consuming operation

print(f"Computing result for {argument}")

time.sleep(2) # Simulating delay

return argument * 2

# Connect to the remote Redis server

# Replace 'remote_host_ip' with the IP address of your remote Redis server

# Replace 'remote_port' with the port number (default Redis port is 6379)

# If your Redis server requires authentication, add password='yourpassword' to the Redis constructor

redis_client = redis.Redis(host='remote_host_ip', port=remote_port, db=0)

def cached_expensive_operation(argument):

# Convert argument to a string to use as a key

key = f"expensive_operation:{argument}"

# Try to get the cached value

cached_value = redis_client.get(key)

if cached_value is not None:

print(f"Cache hit for {argument}")

return int(cached_value)

# If not cached, compute and store the result

result = expensive_operation(argument)

redis_client.set(key, result)

return result

# Example usage

print(cached_expensive_operation(10)) # Will compute and cache

print(cached_expensive_operation(10)) # Will fetch from cache

Note that the main difference is that we are connecting to a remote Redis host here and not a locally deployed one.