Since the creation of the web, a multitude of technologies have aimed to streamline client-server communication. Application Programming Interfaces (APIs) have played a pivotal role in this evolution. However, traditional approaches like SOAP, JSON-RPC, and even RESTful services, while valuable, come with inherent limitations: tight coupling between components, processing inefficiencies, header overhead, and challenges with interoperability, to name a few. To address these issues within its own infrastructure, Google initiated the “Stubby” project in 2001. gRPC, an evolution of this internal project, was released to the public as open-source software in 2015 and later embraced by the Cloud Native Computing Foundation (CNCF) in 2017.

Decoding gRPC: A High-Performance RPC Framework

gRPC sets itself apart by harnessing the power of HTTP/2. HTTP/2 multiplexing lets many independent streams share a single connection, and gRPC carries each call on its own stream. On top of this, gRPC offers four call types:

- Unary (Request-Response): A classic one-to-one exchange, where the client sends a single request and the server sends a single response.

- Client Streaming: The client sends a stream of multiple requests, and the server sends a single response when the client is done.

- Server Streaming: The client sends a single request, and the server responds with a stream of messages.

- Bidirectional Streaming: Both client and server can send streams of messages to each other independently.

The intricate management of these HTTP/2 streams and subchannels remains hidden within gRPC, providing a simplified experience for application developers.
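To make the four shapes concrete, here is a plain-Python sketch of a hypothetical calculator service. It does not use any gRPC library. Ordinary values stand in for single messages and iterators stand in for streams, which loosely mirrors how Python gRPC handlers consume and produce streams. All function names are illustrative.

```python
from typing import Iterable, Iterator


def unary_square(request: int) -> int:
    """Unary: one request in, one response out."""
    return request * request


def client_stream_sum(requests: Iterable[int]) -> int:
    """Client streaming: many requests in, one response once the client is done."""
    return sum(requests)


def server_stream_countdown(request: int) -> Iterator[int]:
    """Server streaming: one request in, a stream of responses out."""
    for n in range(request, 0, -1):
        yield n


def bidi_running_total(requests: Iterable[int]) -> Iterator[int]:
    """Bidirectional streaming: emit a response for each request as it arrives."""
    total = 0
    for value in requests:
        total += value
        yield total


print(unary_square(4))                      # 16
print(client_stream_sum([1, 2, 3]))         # 6
print(list(server_stream_countdown(3)))     # [3, 2, 1]
print(list(bidi_running_total([1, 2, 3])))  # [1, 3, 6]
```

Real bidirectional streams are independent in each direction. The running-total example answers in lockstep only to keep the sketch short.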

```mermaid
graph LR
subgraph Client
    A[Client Application]
    B[gRPC Client Stub]
end
subgraph "gRPC Framework"
    C{Serialization/Deserialization}
    D{HTTP/2 Transport}
end
subgraph Server
    E[gRPC Server Stub]
    F[Server Application]
end
A -->|1. Method Call| B
B -->|2. gRPC Request| C
C -->|3. Serialized Data| D
D -->|4. HTTP/2 Stream over the network| D
D -->|5. Serialized Data| C
C -->|6. Deserialized Request| E
E -->|7. Method Invocation| F
classDef client fill:#f9f,stroke:#333,stroke-width:2px;
classDef server fill:#9ff,stroke:#333,stroke-width:2px;
classDef grpc fill:#ff9,stroke:#333,stroke-width:2px;
class A,B client;
class E,F server;
class C,D grpc;
```

At its core, gRPC follows the fundamental principles of RPC (Remote Procedure Call). A client invokes a procedure as if it were local; gRPC handles the communication with the server, which executes the procedure and returns the result. gRPC builds on this model with HTTP/2 streams, binary serialization, and other optimizations.
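The stub idea is easier to see in code. The sketch below is a hypothetical, in-process stand-in for the flow in the diagram: the client stub serializes the arguments, a plain function call plays the role of the HTTP/2 transport, and the server side deserializes the request and invokes the application method. JSON stands in for Protobuf only to keep the example dependency-free; the `/calc.Calculator/Add` method path and all class names are assumptions made for illustration.

```python
import json
from typing import Any, Callable, Dict


# JSON stands in for Protobuf so the sketch has no dependencies.
def serialize(obj: Any) -> bytes:
    return json.dumps(obj).encode("utf-8")


def deserialize(data: bytes) -> Any:
    return json.loads(data.decode("utf-8"))


class ServerStub:
    """Server side: decode the request, call the application method, encode the result."""

    def __init__(self, methods: Dict[str, Callable[..., Any]]):
        self.methods = methods

    def handle(self, path: str, payload: bytes) -> bytes:
        args = deserialize(payload)
        result = self.methods[path](**args)
        return serialize(result)


class CalculatorStub:
    """Client side: lets the caller invoke a remote method like a local one."""

    def __init__(self, transport: Callable[[str, bytes], bytes]):
        # In real gRPC this would be an HTTP/2 channel; here it is any function.
        self.transport = transport

    def add(self, a: int, b: int) -> int:
        payload = serialize({"a": a, "b": b})
        reply = self.transport("/calc.Calculator/Add", payload)
        return deserialize(reply)


server = ServerStub({"/calc.Calculator/Add": lambda a, b: a + b})
stub = CalculatorStub(transport=server.handle)  # in-process stand-in for the network
print(stub.add(2, 3))  # 5
```

The caller only sees `stub.add(2, 3)`; everything between the two stubs could be swapped for a real network transport without changing that call site.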

A key element in gRPC’s performance is its use of Protobuf (Protocol Buffers) as the default Interface Definition Language (IDL) and message format. Protobuf serializes data into a compact binary format, while HTTP/2 separately compresses headers (using HPACK). From a .proto service definition, gRPC’s tooling also generates client and server code stubs, speeding up development and simplifying maintenance.
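To illustrate why Protobuf messages are compact, the sketch below hand-encodes a hypothetical message, `message Greeting { int32 id = 1; string name = 2; }`, following the Protobuf wire format. Each field is written as a tag (field number and wire type) followed by its value, and integers are stored as base-128 varints. It is a teaching sketch, not a replacement for generated Protobuf code, and it handles only non-negative integers.

```python
VARINT, LEN = 0, 2  # the two Protobuf wire types used here


def encode_varint(value: int) -> bytes:
    """Base-128 varint: 7 bits per byte, least-significant group first,
    with the high bit set on every byte except the last."""
    if value < 0:
        raise ValueError("this sketch only encodes non-negative integers")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)


def encode_tag(field_number: int, wire_type: int) -> bytes:
    """A field's tag is the varint of (field_number << 3) | wire_type."""
    return encode_varint((field_number << 3) | wire_type)


def encode_greeting(id_: int, name: str) -> bytes:
    """Encode the hypothetical message Greeting { int32 id = 1; string name = 2; }."""
    name_bytes = name.encode("utf-8")
    return (
        encode_tag(1, VARINT) + encode_varint(id_)
        + encode_tag(2, LEN) + encode_varint(len(name_bytes)) + name_bytes
    )


print(encode_greeting(150, "hi").hex())  # 08960112026869
```

The resulting seven bytes carry no field names at all; the receiver’s copy of the schema supplies them.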

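Key Point: gRPC has three main implementations: a C-based core library, Java, and Go. Many other languages, including Python, Ruby, and PHP, are supported through wrappers around the C core, and some ecosystems, such as .NET and Node.js, also have native implementations. This makes gRPC suitable for diverse development environments.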

Inside the gRPC Engine: Understanding the Components

Before we examine the communication flow, let’s familiarize ourselves with some key gRPC components:

- Channel: A channel is a virtual connection to a logical target, such as a service name, rather than a single socket. gRPC may back it with one or more HTTP/2 connections to different servers. Clients can configure default channel properties, such as enabling or disabling compression.

- Subchannel: A subchannel represents a connection between the client and one specific backend address; the RPCs sent through it travel as HTTP/2 streams on that connection. A single channel can have multiple subchannels, which is what enables load balancing across multiple servers.
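The relationship between a channel, its subchannels, and HTTP/2 streams can be modeled with two small classes. This is a hypothetical model, not gRPC’s internal API. The only protocol rule it encodes is HTTP/2’s convention that client-initiated streams use odd, increasing stream IDs on each connection. The target string and the addresses (from the 192.0.2.0/24 documentation range) are placeholders.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Subchannel:
    """Hypothetical model: one connection to one backend address."""
    address: str
    next_stream_id: int = 1
    open_streams: List[int] = field(default_factory=list)

    def new_stream(self) -> int:
        # HTTP/2: client-initiated streams use odd, increasing IDs (1, 3, 5, ...).
        stream_id = self.next_stream_id
        self.next_stream_id += 2
        self.open_streams.append(stream_id)
        return stream_id


@dataclass
class Channel:
    """Hypothetical model: a virtual connection to a target, one subchannel per address."""
    target: str
    subchannels: Dict[str, Subchannel] = field(default_factory=dict)

    def update_addresses(self, addresses: List[str]) -> None:
        # Keep existing subchannels; create new ones only for new addresses.
        for address in addresses:
            self.subchannels.setdefault(address, Subchannel(address))


channel = Channel("dns:///api.example.com:443")
channel.update_addresses(["192.0.2.10:443", "192.0.2.11:443"])
sub = channel.subchannels["192.0.2.10:443"]
print([sub.new_stream() for _ in range(3)])  # [1, 3, 5]
print(len(channel.subchannels))              # 2
```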

```mermaid
graph TB
A[gRPC Client] --> B[gRPC Channel]
B --> C[Load Balancer]
C --> D[Subchannel 1]
C --> E[Subchannel 2]
C --> F[Subchannel 3]
D --> G[Backend Server 1]
E --> H[Backend Server 2]
F --> I[Backend Server 3]
subgraph "Client Side"
    A
    B
    C
end
subgraph "Network"
    D
    E
    F
end
subgraph "Server Side"
    G
    H
    I
end
classDef client fill:#f9f,stroke:#333,stroke-width:2px;
classDef channel fill:#ff9,stroke:#333,stroke-width:2px;
classDef subchannel fill:#9ff,stroke:#333,stroke-width:2px;
classDef server fill:#9f9,stroke:#333,stroke-width:2px;
class A client;
class B,C channel;
class D,E,F subchannel;
class G,H,I server;
```

gRPC also provides optional, pluggable components for added flexibility:

- DNS Resolver: This component resolves domain names (e.g., api.example.com) to IP addresses, potentially returning multiple IPs for load balancing purposes.

- Load Balancer: The load-balancing policy decides which backend each RPC is sent to, distributing calls across the available servers according to its configuration (for example, gRPC’s built-in pick_first and round_robin policies). This spreads load and improves scalability.

- Envoy Proxy: Although not part of gRPC itself, this popular proxy is often deployed alongside it, for example to translate gRPC-Web requests from browsers, or other HTTP/1.1 traffic, into gRPC over HTTP/2 for backend servers.
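The resolver and load-balancer roles can be sketched in a few lines. The resolver below is a hypothetical static lookup table, so the example runs without network access, and the picker cycles through addresses the way a round_robin policy would. (gRPC’s default policy is pick_first; round_robin is one of the built-in alternatives.) None of these names belong to a real gRPC API.

```python
import itertools
from typing import Callable, Dict, List


def make_static_resolver(table: Dict[str, List[str]]) -> Callable[[str], List[str]]:
    """Hypothetical resolver: looks names up in a fixed table instead of querying DNS."""
    def resolve(target: str) -> List[str]:
        return list(table[target])
    return resolve


class RoundRobinPicker:
    """Picks backends in turn, one per RPC, in the spirit of a round_robin policy."""

    def __init__(self, addresses: List[str]):
        if not addresses:
            raise ValueError("no backend addresses to pick from")
        self._cycle = itertools.cycle(addresses)

    def pick(self) -> str:
        return next(self._cycle)


resolve = make_static_resolver(
    {"api.example.com:443": ["192.0.2.10:443", "192.0.2.11:443", "192.0.2.12:443"]}
)
picker = RoundRobinPicker(resolve("api.example.com:443"))
print([picker.pick() for _ in range(4)])
# ['192.0.2.10:443', '192.0.2.11:443', '192.0.2.12:443', '192.0.2.10:443']
```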

gRPC in Action: The Communication Cycle

gRPC excels at managing network connections and remote calls. Let’s break down a typical connection lifecycle into 10 steps:

- The client calls a method on the generated stub, whose interface comes from the service definition.

- The generated code marshals (serializes) the request data for transmission and hands the call to the gRPC framework, which establishes a connection if one is not already available.

- gRPC passes the channel’s target name (for example, dns:///api.example.com:443), which was supplied when the channel was created, to the name resolver (DNS by default).

- The resolver returns a list of IP addresses for the backend servers associated with that name.

- gRPC provides this list to the load balancer, which selects the most appropriate backend server based on its configuration.

- gRPC opens a connection to the selected backend server through its transport layer, and the client’s stub sends its messages through the channel that owns this connection.

- The gRPC transport establishes an HTTP/2 connection (over TCP, typically secured with TLS) to the server’s listener.

- The listener on the server side receives the connection request and notifies the server-side gRPC.

- The server-side gRPC can accept or reject the connection. Once it is accepted, the client-side subchannel for that backend becomes ready. Each subchannel has its own transport with separate read and write buffers for efficient data transfer.

- Communication between the client and server occurs over this established subchannel. Multiple RPCs can be sent concurrently over a single subchannel, with each call using its own HTTP/2 stream.
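The connection part of this lifecycle can be viewed from the client side as a subchannel moving through connectivity states. gRPC defines the states IDLE, CONNECTING, READY, TRANSIENT_FAILURE, and SHUTDOWN; the transition table below is a simplified assumption for illustration, not the complete rules from gRPC’s connectivity-state documentation.

```python
from enum import Enum, auto
from typing import Dict, List, Set


class State(Enum):
    IDLE = auto()
    CONNECTING = auto()
    READY = auto()
    TRANSIENT_FAILURE = auto()
    SHUTDOWN = auto()


# Simplified transition table (an assumption for this sketch, not gRPC's full rules).
ALLOWED: Dict[State, Set[State]] = {
    State.IDLE: {State.CONNECTING, State.SHUTDOWN},
    State.CONNECTING: {State.READY, State.TRANSIENT_FAILURE, State.SHUTDOWN},
    State.READY: {State.IDLE, State.TRANSIENT_FAILURE, State.SHUTDOWN},
    State.TRANSIENT_FAILURE: {State.CONNECTING, State.SHUTDOWN},
    State.SHUTDOWN: set(),
}


class SubchannelLifecycle:
    def __init__(self) -> None:
        self.state = State.IDLE
        self.history: List[State] = [self.state]

    def transition(self, new_state: State) -> None:
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"illegal transition {self.state.name} -> {new_state.name}")
        self.state = new_state
        self.history.append(new_state)

    def connect(self, server_accepts: bool) -> State:
        """Start connecting; the server's accept or reject decides the outcome."""
        self.transition(State.CONNECTING)
        self.transition(State.READY if server_accepts else State.TRANSIENT_FAILURE)
        return self.state


lifecycle = SubchannelLifecycle()
lifecycle.connect(server_accepts=False)  # rejected: TRANSIENT_FAILURE
lifecycle.connect(server_accepts=True)   # retried and accepted: READY
print([s.name for s in lifecycle.history])
# ['IDLE', 'CONNECTING', 'TRANSIENT_FAILURE', 'CONNECTING', 'READY']
```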

```mermaid
sequenceDiagram
participant Client
participant Subchannel
participant Server
Note over Client,Server: Single gRPC Subchannel
Client->>+Subchannel: Initiate Connection
Subchannel->>+Server: Establish HTTP/2 Connection
Server-->>-Subchannel: Connection Established
Subchannel-->>-Client: Ready for RPCs
par RPC Call 1
    Client->>+Subchannel: Stream 1: Request A
    Subchannel->>+Server: Stream 1: Request A
    Server-->>-Subchannel: Stream 1: Response A
    Subchannel-->>-Client: Stream 1: Response A
and RPC Call 2
    Client->>+Subchannel: Stream 2: Request B
    Subchannel->>+Server: Stream 2: Request B
    Server-->>-Subchannel: Stream 2: Response B
    Subchannel-->>-Client: Stream 2: Response B
and RPC Call 3
    Client->>+Subchannel: Stream 3: Request C
    Subchannel->>+Server: Stream 3: Request C
    Server-->>-Subchannel: Stream 3: Response C
    Subchannel-->>-Client: Stream 3: Response C
end
Note over Client,Server: Multiple concurrent RPCs over single subchannel
rect rgb(240, 240, 240)
    Note over Client,Server: HTTP/2 Multiplexing enables concurrent streams
end
```

Each subchannel corresponds to one connection to one backend address. When another backend is needed, or a connection is lost, gRPC can create a new subchannel or reconnect an existing one, so a single channel can spread traffic across many servers. This contributes to the system’s scalability.
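When a subchannel’s connection fails, gRPC does not reconnect in a tight loop; it waits with exponential backoff and jitter. The sketch below computes such a schedule. The constants follow the defaults described in gRPC’s connection-backoff design document (1 second initial backoff, 1.6 multiplier, 20% jitter, 120 second cap), but treat them as illustrative and check that document for your gRPC version.

```python
import random
from typing import List, Optional

# Defaults described in gRPC's connection-backoff design document (illustrative).
INITIAL_BACKOFF = 1.0  # seconds
MULTIPLIER = 1.6
JITTER = 0.2           # +/- 20%
MAX_BACKOFF = 120.0    # seconds


def backoff_schedule(attempts: int, rng: Optional[random.Random] = None) -> List[float]:
    """Delays (in seconds) before successive reconnect attempts.

    The first delay is INITIAL_BACKOFF; each later one grows by MULTIPLIER,
    is capped at MAX_BACKOFF, and gets random jitter when an rng is supplied."""
    if attempts <= 0:
        return []
    delays = [INITIAL_BACKOFF]
    current = INITIAL_BACKOFF
    for _ in range(attempts - 1):
        current = min(current * MULTIPLIER, MAX_BACKOFF)
        jitter = rng.uniform(-JITTER * current, JITTER * current) if rng is not None else 0.0
        delays.append(current + jitter)
    return delays


print([round(d, 3) for d in backoff_schedule(5)])  # [1.0, 1.6, 2.56, 4.096, 6.554]
```

Passing a seeded `random.Random` makes the jittered schedule reproducible, which is handy in tests; the jitter itself keeps many clients from reconnecting to a recovering server at the same instant.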

Anatomy of a Remote Call: Key Operations

Now that we understand subchannel creation, let’s examine the basic operations within a gRPC remote call:

- Headers: The client initiates communication by sending headers containing the call’s metadata (such as the method path and an optional timeout) to the server. The server responds with its own headers, known as initial metadata.

- Messages: The client sends a message containing the actual RPC request. The server processes this request and sends back a message containing the response. The number of messages exchanged varies with the call type (unary or streaming).

- Half-Close: When finished sending requests, the client half-closes the stream (in HTTP/2, by setting the END_STREAM flag), signaling that it will send no more messages but can still receive. The stream, not the underlying connection, closes once the server finishes responding.

- Trailer: After sending its responses, the server sends trailers that carry the call’s final status (the grpc-status code and an optional grpc-message) along with any additional metadata, such as server load or error details.
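The header and trailer operations map onto ordinary HTTP/2 header fields. The sketch below builds them following gRPC’s HTTP/2 protocol description: requests are POSTs to a path of the form /package.Service/Method with content-type application/grpc and te: trailers, an optional grpc-timeout is encoded as at most eight digits plus a unit letter (H, M, S, m, u, n), and trailers carry grpc-status. The service name, method, and authority are hypothetical, and the helper names are this sketch’s own.

```python
from typing import List, Tuple

Header = Tuple[str, str]

# (unit letter, nanoseconds per unit), finest first, as allowed for grpc-timeout.
TIMEOUT_UNITS = [
    ("n", 1), ("u", 1_000), ("m", 1_000_000),
    ("S", 1_000_000_000), ("M", 60 * 1_000_000_000), ("H", 3600 * 1_000_000_000),
]


def encode_grpc_timeout(timeout_ns: int) -> str:
    """Use the finest unit whose value fits in 8 digits, rounding up so the
    encoded timeout is never shorter than the one requested."""
    if timeout_ns <= 0:
        raise ValueError("timeout must be positive")
    for unit, ns_per_unit in TIMEOUT_UNITS:
        value = -(-timeout_ns // ns_per_unit)  # ceiling division
        if value <= 99_999_999:
            return f"{value}{unit}"
    raise ValueError("timeout too large to encode")


def request_headers(service: str, method: str, authority: str, timeout_ns: int) -> List[Header]:
    return [
        (":method", "POST"),
        (":scheme", "https"),
        (":path", f"/{service}/{method}"),
        (":authority", authority),
        ("te", "trailers"),
        ("content-type", "application/grpc"),
        ("grpc-timeout", encode_grpc_timeout(timeout_ns)),
    ]


def trailers(status_code: int, message: str = "") -> List[Header]:
    fields = [("grpc-status", str(status_code))]
    if message:
        # Real implementations percent-encode grpc-message; omitted here.
        fields.append(("grpc-message", message))
    return fields


headers = dict(request_headers("example.v1.UserService", "GetUser",
                               "api.example.com", timeout_ns=150 * 1_000_000_000))
print(headers[":path"])         # /example.v1.UserService/GetUser
print(headers["grpc-timeout"])  # 150000m
print(trailers(5, "user not found"))
# [('grpc-status', '5'), ('grpc-message', 'user not found')]
```

Status code 0 means OK and 5 means NOT_FOUND. Choosing the finest unit that fits and rounding up is this sketch’s own policy; implementations may encode the same deadline differently.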

```mermaid
sequenceDiagram
participant Client
participant Server
Note over Client,Server: gRPC Remote Call Timeline
Client->>+Server: Headers (Metadata)
Server-->>-Client: Headers (Initial Metadata)
Note over Client,Server: Stream Open
alt Unary RPC
    Client->>Server: Message (Request)
    Server-->>Client: Message (Response)
else Streaming RPC
    loop Multiple Messages
        Client->>Server: Message (Request)
        Server-->>Client: Message (Response)
    end
end
Client->>Server: Half-Close Signal
Note right of Client: Client enters receive-only mode
Server-->>Client: Trailer (Status, Additional Info)
Note over Client,Server: Stream Closed
rect rgb(240, 240, 240)
    Note over Client,Server: Timeline shows allowed operations for client and server
end
```

In a simple unary call (request-response), these operations typically happen in sequence: the server receives all client messages (headers, request, half-close) before sending its own (headers, response, trailer).
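At the HTTP/2 level, such a unary call is a handful of frames on one stream. The sketch below shows gRPC’s length-prefixed message format (a 1-byte compressed flag, then a 4-byte big-endian length, then the serialized message) and the typical frame order. Header blocks are reduced to a single field each, the method path is hypothetical, and half-close is shown as the END_STREAM flag on the client’s last DATA frame; an implementation may instead send that flag on a separate, empty DATA frame.

```python
import struct
from typing import List, NamedTuple


def frame_message(payload: bytes, compressed: bool = False) -> bytes:
    """gRPC length-prefixed message: 1-byte compressed flag, 4-byte big-endian length, payload."""
    return struct.pack(">BI", 1 if compressed else 0, len(payload)) + payload


def unframe_message(data: bytes) -> bytes:
    flag, length = struct.unpack(">BI", data[:5])
    if flag != 0:
        raise NotImplementedError("compressed messages are not handled in this sketch")
    return data[5:5 + length]


class Frame(NamedTuple):
    sender: str
    kind: str         # HTTP/2 frame type
    end_stream: bool  # END_STREAM flag: this side of the stream is now closed
    body: object


def unary_call_frames(request: bytes, response: bytes) -> List[Frame]:
    """Typical frame order for one unary call on a single HTTP/2 stream (simplified)."""
    return [
        Frame("client", "HEADERS", False, {":path": "/example.v1.Greeter/Greet"}),
        Frame("client", "DATA", True, frame_message(request)),   # request, then half-close
        Frame("server", "HEADERS", False, {":status": "200"}),   # initial metadata
        Frame("server", "DATA", False, frame_message(response)),
        Frame("server", "HEADERS", True, {"grpc-status": "0"}),  # trailers close the stream
    ]


for frame in unary_call_frames(b"ping", b"pong"):
    print(frame.sender, frame.kind, "END_STREAM" if frame.end_stream else "")
print(frame_message(b"ping").hex())  # 000000000470696e67
```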

```mermaid
sequenceDiagram
participant Client
participant Server
Note over Client,Server: Unary gRPC Call
rect rgb(230, 255, 230)
    Note right of Client: Client Operations
    Client->>+Server: Headers (Metadata)
    Client->>Server: Message (Request)
    Client->>Server: Half-Close Signal
end
Note over Client,Server: Server processes request
rect rgb(255, 230, 230)
    Note left of Server: Server Operations
    Server-->>-Client: Headers (Metadata)
    Server-->>Client: Message (Response)
    Server-->>Client: Trailer (Additional Info)
end
Note over Client,Server: Call Completed
rect rgb(240, 240, 240)
    Note over Client,Server: All client operations complete before server responds
end
```

Weighing the Pros and Cons of gRPC

gRPC, under active development, offers several compelling advantages:

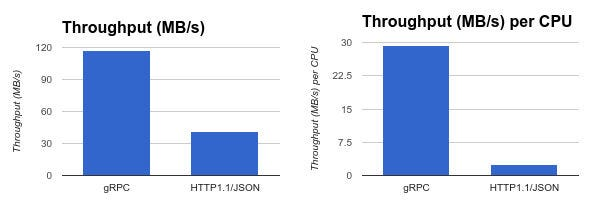

- Performance: gRPC is known for its speed and efficiency, which come from its compact binary serialization (Protobuf) and from HTTP/2 transport features such as multiplexing, header compression, and long-lived connections. In certain benchmarks, gRPC has demonstrated significantly higher throughput than JSON/HTTP-based solutions.

- Code Generation and Contracts: gRPC uses machine-readable contracts defined with Protobuf. This enables automatic code generation for clients and servers in multiple languages from a single definition, ensuring consistency and reducing development time.

- Communication Flexibility: gRPC supports four communication patterns (unary, client streaming, server streaming, bidirectional streaming), making it adaptable to diverse application needs.

- Built-in Features: It provides built-in mechanisms for handling timeouts, retries, cancellations, and flow control, simplifying development.

- Extensibility: Its modular design with pluggable components (resolvers, load balancers, etc.) gives developers fine-grained control over the gRPC environment.
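Timeouts and retries are typically configured through gRPC’s service config, a JSON document supplied to a channel (for example, by the name resolver or as a client-side default). The dictionary below mirrors such a config with a retry policy, using a hypothetical service name. The helper computes the upper bound of each retry delay under the exponential backoff rule from gRPC’s retry design, where each actual delay is drawn at random between zero and that bound.

```python
from typing import Dict, List

# Mirrors a gRPC service config (normally JSON); the service name is hypothetical.
SERVICE_CONFIG: Dict = {
    "methodConfig": [{
        "name": [{"service": "example.v1.Greeter"}],
        "timeout": "1s",
        "retryPolicy": {
            "maxAttempts": 5,
            "initialBackoff": "0.1s",
            "maxBackoff": "0.5s",
            "backoffMultiplier": 2,
            "retryableStatusCodes": ["UNAVAILABLE"],
        },
    }]
}


def parse_seconds(duration: str) -> float:
    """Parse duration strings of the form "0.1s"."""
    if not duration.endswith("s"):
        raise ValueError(f"unsupported duration: {duration!r}")
    return float(duration[:-1])


def retry_delay_caps(policy: Dict) -> List[float]:
    """Upper bound of the random delay before each retry (attempts 2..maxAttempts).
    Retry n waits a random time in [0, min(initial * multiplier**(n-1), max)]."""
    initial = parse_seconds(policy["initialBackoff"])
    max_backoff = parse_seconds(policy["maxBackoff"])
    multiplier = policy["backoffMultiplier"]
    return [min(initial * multiplier ** n, max_backoff)
            for n in range(policy["maxAttempts"] - 1)]


policy = SERVICE_CONFIG["methodConfig"][0]["retryPolicy"]
print(retry_delay_caps(policy))  # [0.1, 0.2, 0.4, 0.5]
```

Only calls that fail with a status listed in retryableStatusCodes are retried, and the method’s overall timeout still bounds the total time spent across attempts.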

However, gRPC also has some limitations:

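- Limited Browser Support: Browsers do not give web applications the low-level control over HTTP/2 that gRPC requires (for example, access to trailers), so browser code cannot call gRPC services directly. Additional layers, such as gRPC-Web together with a translating proxy, are needed to bridge this gap.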

- Debugging Challenges: The use of a binary format, while efficient, can make debugging and inspecting messages more difficult compared to text-based protocols.

- Complexity and Overhead: While powerful, gRPC’s additional features and abstractions can add complexity and potential overhead, especially when compared to simpler REST/HTTP architectures.
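The debugging point is easy to see with the bytes of a Protobuf message. Without the schema, a decoder can recover only field numbers, wire types, and raw values, similar to what `protoc --decode_raw` prints. The sketch below performs that schema-less decoding for top-level fields; it is a simplified illustration that rejects the deprecated group wire types.

```python
from typing import List, Tuple


def decode_varint(data: bytes, pos: int) -> Tuple[int, int]:
    """Read a base-128 varint starting at pos; return (value, next_pos)."""
    result, shift = 0, 0
    while True:
        byte = data[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def decode_raw(data: bytes) -> List[Tuple[int, int, object]]:
    """Schema-less dump of top-level fields as (field_number, wire_type, value).
    Handles varint (0), 64-bit (1), length-delimited (2) and 32-bit (5) wire types."""
    fields, pos = [], 0
    while pos < len(data):
        key, pos = decode_varint(data, pos)
        field_number, wire_type = key >> 3, key & 0x07
        if wire_type == 0:
            value, pos = decode_varint(data, pos)
        elif wire_type == 1:
            value, pos = data[pos:pos + 8], pos + 8
        elif wire_type == 2:
            length, pos = decode_varint(data, pos)
            value, pos = data[pos:pos + length], pos + length
        elif wire_type == 5:
            value, pos = data[pos:pos + 4], pos + 4
        else:
            raise ValueError(f"unsupported wire type {wire_type}")
        if pos > len(data):
            raise ValueError("truncated message")
        fields.append((field_number, wire_type, value))
    return fields


print(decode_raw(bytes.fromhex("08960112026869")))  # [(1, 0, 150), (2, 2, b'hi')]
```

Field 2 could be a string, raw bytes, or a nested message; only the .proto definition says which.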